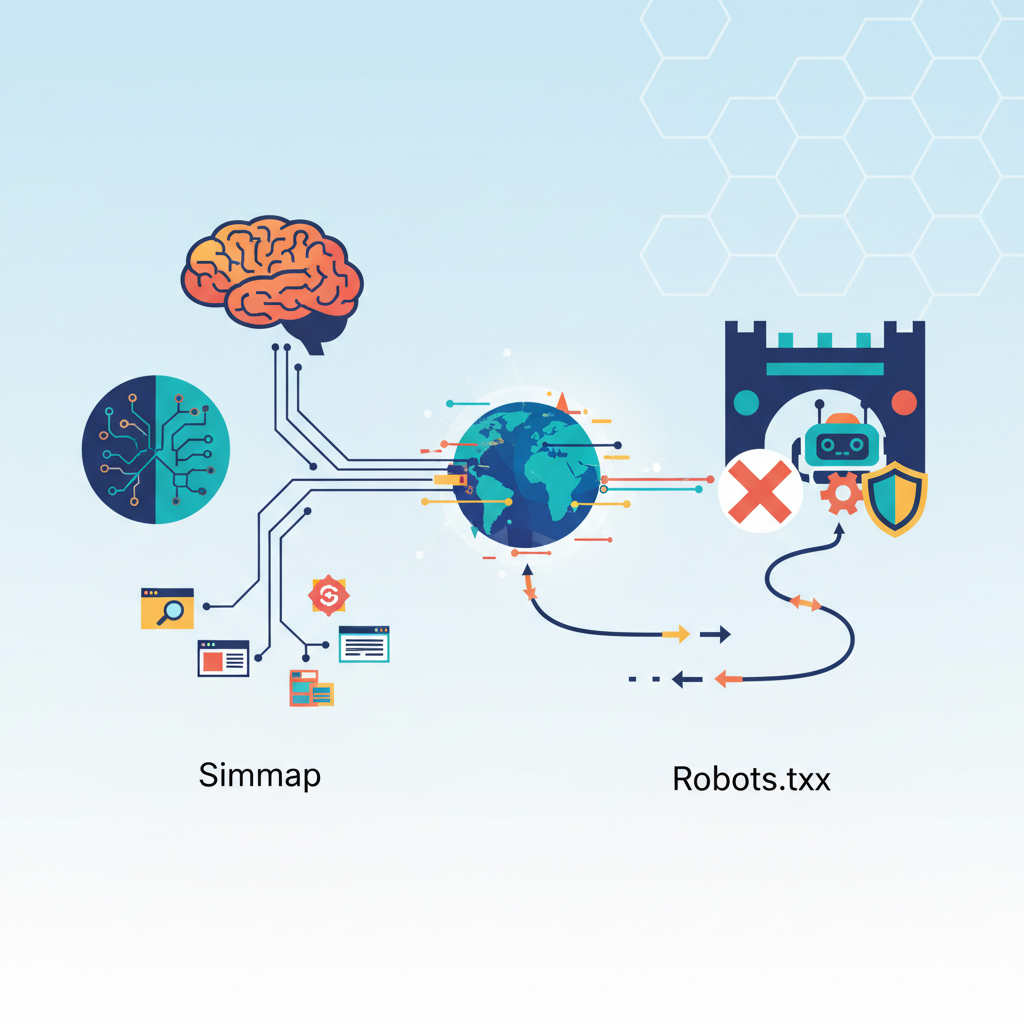

The digital landscape is constantly evolving, and for website owners aiming for top search engine rankings, understanding Sitemap and Robots.txt is essential. This guide covers best practices for both of these SEO elements, from creating them to troubleshooting common issues, so that search engines can crawl and index your website effectively in 2025 and beyond.


Understanding Sitemaps: The Foundation of SEO

A sitemap is essentially a “map” of your website’s content that guides search engine crawlers like Googlebot. It lists the important pages on your site so that search engines can discover and index them efficiently. Effective Sitemap SEO is key for achieving high rankings. In 2025, sitemaps may play an even more critical role, especially with the continued rise of AI-powered search and the increasing importance of structured data. Detailed, dynamically updated XML Sitemaps could become useful not only for standard pages but also for rich media such as 3D models or interactive web-based games.


Types of Sitemaps and Their Uses

Sitemaps come in different formats, each serving a specific purpose:

| Sitemap Type | Description | Best Use |
|---|---|---|
| XML Sitemap | Standard format supported by major search engines. | All website types, including e-commerce and blogs. |
| HTML Sitemap | Human-readable version, beneficial for site navigation and user experience. | Primarily for usability, complements XML sitemap. |
| Image Sitemap | Specifically for images, detailing file URLs and captions. | For websites with a large number of images, especially e-commerce. |
| Video Sitemap | Focuses on video content, including video titles, descriptions, and thumbnails. | For video-rich platforms like YouTube or Vimeo-integrated sites. |

Mastering Robots.txt: Controlling Search Engine Crawlers

Robots.txt acts as a gatekeeper, telling search engine crawlers which parts of your website to crawl and which to skip. Proper use of Robots.txt is crucial for managing your website’s SEO and keeping crawlers away from content you don’t want them to fetch. Keep in mind that it is an advisory file for crawlers, not an access-control mechanism: it cannot restrict what human visitors see, and a blocked URL can still appear in search results if other sites link to it. In 2025, Robots.txt directives could play a larger part in how search engines interact with dynamic content and AI-generated pages. For instance, a web-based game could disallow crawling of its later levels so that they are less likely to surface in search results, preserving gameplay surprises.
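To make the gatekeeper idea concrete, here is a minimal sketch using Python’s standard `urllib.robotparser` module. The rules, the `example.com` URLs, and the helper name `is_crawlable` are assumptions made up for illustration. Note also that this parser does simple prefix matching and may not interpret every directive exactly as a given search engine does.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""

def is_crawlable(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given rules let `user_agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse rules from text, no network access
    return parser.can_fetch(user_agent, url)

print(is_crawlable(ROBOTS_TXT, "Googlebot", "https://example.com/blog/post"))    # prints True
print(is_crawlable(ROBOTS_TXT, "Googlebot", "https://example.com/admin/login"))  # prints False
```

The second URL is refused because its path starts with the disallowed prefix `/admin/`.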

Common Mistakes to Avoid with Robots.txt

- Blocking essential pages: Accidentally blocking crucial pages from being indexed can severely impact SEO.

- Incorrect syntax: Even a small error in your Robots.txt file can prevent crawlers from accessing your website correctly.

- Over-blocking: Restricting access to too much content can hinder search engine discoverability.
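One way to catch these mistakes before they reach production is to test a draft Robots.txt against a list of pages that must stay crawlable. The sketch below assumes a hypothetical rule, `Disallow: /p`, that was meant to hide `/private/` but, because rules match by path prefix, also blocks `/products/`. The helper name and URLs are illustrative.

```python
from urllib.robotparser import RobotFileParser

def blocked_essential_urls(robots_txt, essential_urls, user_agent="*"):
    """Return the essential URLs that the given rules would block."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in essential_urls if not parser.can_fetch(user_agent, url)]

# Hypothetical draft: "/p" is too short a prefix and over-blocks.
DRAFT_ROBOTS_TXT = """\
User-agent: *
Disallow: /p
"""

ESSENTIAL = [
    "https://example.com/products/shoes",
    "https://example.com/about",
]

print(blocked_essential_urls(DRAFT_ROBOTS_TXT, ESSENTIAL))
# prints ['https://example.com/products/shoes']
```

An empty list means none of the essential pages are blocked for that user agent.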

Optimizing Sitemap and Robots.txt for Enhanced SEO: A Synergistic Approach

The most effective SEO strategy involves a coordinated approach using both Sitemap and Robots.txt. A well-structured sitemap complements a carefully crafted Robots.txt, allowing search engines to access and index the most valuable content. This synergistic approach will become even more important in 2025 as search engine algorithms become more sophisticated.
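A simple consistency check illustrates why the two files should be coordinated: a URL that appears in the sitemap but is disallowed in Robots.txt sends crawlers mixed signals. The following sketch, with made-up sample data and hypothetical helper names, lists such conflicts using only the Python standard library.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def sitemap_urls(sitemap_xml):
    """Extract every <loc> value from an XML sitemap string."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]

def sitemap_robots_conflicts(sitemap_xml, robots_txt, user_agent="*"):
    """Return sitemap URLs that the robots.txt rules disallow."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in sitemap_urls(sitemap_xml) if not parser.can_fetch(user_agent, url)]

# Hypothetical sample data, for illustration only.
SITEMAP_XML = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/blog/seo-guide</loc></url>
  <url><loc>https://example.com/drafts/unfinished</loc></url>
</urlset>"""

ROBOTS_TXT = "User-agent: *\nDisallow: /drafts/\n"

print(sitemap_robots_conflicts(SITEMAP_XML, ROBOTS_TXT))
# prints ['https://example.com/drafts/unfinished']
```

Each conflict should be resolved one way or the other: either remove the URL from the sitemap or lift the disallow rule.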

Creating an Effective Sitemap

Following these steps ensures a high-performing sitemap:

- Choose the right format: Select the appropriate sitemap format based on your website’s content.

- Generate your sitemap: Numerous tools exist to generate sitemaps automatically, especially for platforms such as WordPress.

- Submit your sitemap to search engines: This enables faster and more efficient indexing of your content.
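If you prefer not to rely on a plugin, the generation step can be sketched in a few lines of Python. This is a minimal example, not a full generator: the function name `build_sitemap`, the page list, and the dates are assumptions for illustration, and only the `loc` and `lastmod` elements of the sitemaps.org format are written.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build an XML sitemap string.

    `pages` is an iterable of (url, lastmod) pairs, where lastmod is a
    date string such as "2025-01-15" or None to omit the element.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # special characters like & are escaped on output
        if lastmod:
            ET.SubElement(entry, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Hypothetical pages, for illustration only.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2025-01-15"),
    ("https://example.com/about", None),
])
print(sitemap_xml)
```

The resulting string can be saved as `sitemap.xml` at the site root and then submitted to search engines.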

Actionable Steps: Optimizing Your Website for 2025

By understanding and implementing these best practices for Sitemap and Robots.txt, you can significantly improve your website’s SEO performance. This translates to better search engine rankings, increased visibility, and ultimately, higher traffic. Remember to regularly review and update your sitemap and Robots.txt file as your website evolves. This process is ongoing, not a one-time fix.

People Also Ask: Sitemap and Robots.txt FAQs

What is a sitemap? A sitemap is a file that lists all the important pages on your website, making it easier for search engines to find and index your content.

What is Robots.txt?

Robots.txt is a file that tells search engine crawlers which pages they should or shouldn’t crawl.

How do I create an XML sitemap? Many online tools and plugins (like those for WordPress) can automatically generate XML sitemaps.

How do I check my Robots.txt file?

Simply type yourwebsite.com/robots.txt into your browser’s address bar.
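The file always sits at the root of the host, no matter which page you start from. As a small sketch (the helper name `robots_txt_url` is made up for illustration), its address can be derived from any page URL like this:

```python
from urllib.parse import urlsplit, urlunsplit

def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt address for the host that serves `page_url`."""
    parts = urlsplit(page_url)
    # Keep scheme and host; replace path, query, and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))

print(robots_txt_url("https://yourwebsite.com/blog/post?id=3"))
# prints https://yourwebsite.com/robots.txt
```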

Is a sitemap necessary for SEO? While not strictly required, a well-structured sitemap significantly improves the chances of your website being thoroughly indexed.

Does Robots.txt affect SEO?

Yes, improperly configured Robots.txt can prevent search engines from accessing and indexing important pages, harming your SEO.

What kind of sitemap should I use? Start with an XML sitemap. If your site has many images or videos, consider image or video sitemaps as well.

How do I submit my sitemap to Google? Use Google Search Console to submit your sitemap for efficient indexing.

Key Takeaway: Mastering Sitemap and Robots.txt is not just about technical proficiency; it’s about strategically guiding search engines to your valuable content, ensuring your website is readily discoverable in the ever-competitive digital landscape of 2025 and beyond. This requires ongoing monitoring and adaptation to maintain optimal website indexing.

